A/B testing (along with its close cousin, multivariate testing) is a widely adopted optimization technique that marketers and technologists use to fine-tune a digital property (web page, email, etc.) to produce the best possible outcomes. It’s a data-driven approach to making well-informed decisions. From design and calls-to-action to headline copy and product photography, there are endless ways to leverage A/B testing.

Conducting A/B tests allows brands to put variations of the same web page head to head to help determine which is the highest performing. Whether it is conversion rate, engagement, or any other test parameter pertinent to your business, the goal is implementing incremental improvements through data analysis and hypothesis.

A/B testing is not just for the major brands with tons of traffic. With off-the-shelf software, smaller players with a reasonable amount of traffic are still able to conduct useful tests. That said, the more traffic you have, the sooner your tests will reach statistical significance.

Let’s see if A/B testing is the right fit for you.

A/B testing works by validating (or invalidating) a hypothesis you’ve made about an aspect of your design or digital marketing tactics. Here’s the anatomy:

Control. The control is the starting point. The constant. The ‘A’. This is what you’re putting up to battle first and then measuring the other variants against.

Variant. The variant is the opposition. It’s your alternate version of ‘A’. AKA the ‘B’.

Additional Variants. You may run several variants at a given time.

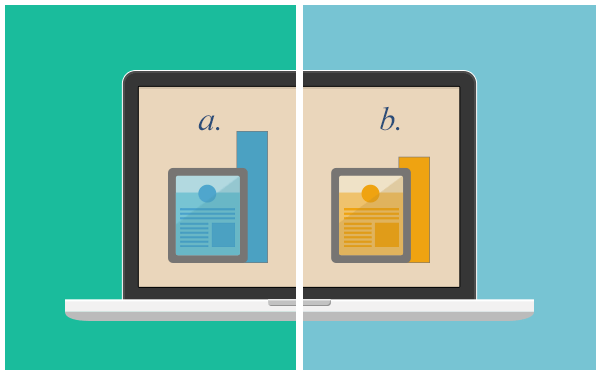

Let’s use the following diagram from Optimizely to explain:

‘A’ is the web page on the left - the control. ‘B’ is the web page on the right - the variant. Can you spot the test? The test is trying to determine if changing the “BUY NOW” button colour from grey to red will result in more action.

So we have the test parameters locked in. We would then put both versions live at the same time, sending 50% of the web traffic to version A and 50% to version B. Then we wait until statistical significance is achieved (more on this later), analyze the results, and implement the change based on the option that drives the highest number of add-to-cart actions.
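If you’re curious what a 50/50 split might look like under the hood, here is a minimal Python sketch. It is not how Optimizely or any particular tool does it; the function name `assign_variant`, the experiment label, and the visitor IDs are all made up for illustration. The idea is simply to hash each visitor’s ID so the same person always sees the same version.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "buy-now-colour") -> str:
    """Return 'A' or 'B' for a visitor, splitting traffic roughly 50/50.

    Both the experiment label and the visitor ID format are hypothetical.
    """
    # Hash the experiment name together with the visitor ID so the same
    # visitor always lands in the same bucket for this experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"


# Simulate 10,000 made-up visitors and count how many land in each version.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)
```

Hashing instead of flipping a coin on every page load means a returning visitor doesn’t bounce between the grey and red button, which would muddy the results.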

It’s important to understand the difference between A/B testing and multivariate testing. The two are commonly treated as interchangeable, but they are not:

A/B testing measures the difference in performance between two separate versions of a web page that differ by a single change.

Multivariate testing measures the effectiveness of different combinations of elements within a single web page.

To put your assumptions to the test, you need to ensure that you have enough data for the test results to be deemed accurate (i.e. not just a fluke). You need to be confident in your results before implementing whatever change might be necessary. To achieve this, you will have to deal with confidence intervals and advanced statistical analysis. Bleh.

Thankfully(!) there are tools and software at our disposal that make this math-heavy part a lot easier than it used to be. As an example, let’s look at how much web traffic (sample size) and time you’d need to be able to run an effective and accurate A/B test.

Using an online calculator, if one of your landing pages currently converts at 2%, and you want to be able to detect changes in performance that are greater than 20% (+ or -), you’ll need 20,000 visits to each web page variation. That's 40,000 visits in total.

In other words, if your page gets 2,000 visits per day, after twenty days of testing you can be 95% confident that your results are accurate. With a fairly low traffic site that sends 100 visits per day to that page, the same test would take 400 days to complete.
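For the curious, the math behind calculators like this can be sketched in a few lines of Python. This uses a standard textbook formula for comparing two conversion rates; we don’t know exactly which formula any given online calculator uses, and the 80% statistical power setting is an assumption on our part, so expect a figure in the same ballpark as the 20,000 above (about 21,000) rather than an exact match. The function names are ours.

```python
from math import ceil
from statistics import NormalDist


def visits_per_variation(baseline_rate, relative_change,
                         confidence=0.95, power=0.80):
    """Estimate visits needed per variation to detect a relative change.

    power=0.80 is an assumed default; the article does not state one.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_change)
    # z-scores for a two-sided test at the chosen confidence and power
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(visits_needed, daily_visits, variations=2):
    """Days needed when daily traffic is shared across all variations."""
    return ceil(visits_needed * variations / daily_visits)


n = visits_per_variation(baseline_rate=0.02, relative_change=0.20)
print(n)                      # visits needed for each variation
print(days_to_run(n, 2_000))  # busy page
print(days_to_run(n, 100))    # low traffic page
```

Try bumping `relative_change` up to 0.50 or lowering `confidence` to 0.90 and watch how quickly the required traffic drops.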

As you can see with the calculator, you’re able to change any part of the equation. If you convert at a higher rate, only want to be able to detect big changes (like 50% variances), and are more lenient with your level of confidence, the same A/B tests can be carried out a lot faster depending on your traffic levels.

If you operate a very low traffic website, don’t be discouraged. There are methods that could work for you as well. Here’s a post on it.

Although there are seemingly endless ways to A/B test your web pages or digital marketing tactics, here are some ideas to get you started:

CTA Buttons. Try several variations of your call-to-action (CTA) button design. Test different shapes, design, drop shadows, gradients, arrows, underlines, verbiage, typography, font size, placement, colour and exclamation marks!

Related Product Recommendations. Your cross-selling, “You may also like”, suggestions should also be A/B tested. Test the verbiage by trying, “Frequently bought with”, and try using different product groupings and photography.

Column Position. If your site has a column on its product page or shopping cart page, swap the column position from left to right and test the changes in effectiveness and engagement.

Ads. A/B test your AdWords or display ads. You should be testing your headlines, descriptions, landing page URLs, titles, landing page designs, and display images.

Forms. Always be testing your form design. Test the length, design, field description text, input type, and pop up vs on-page. Read more on designing forms for mobile to boost conversions.

Product Imagery. You should be testing product angles, lighting, models, model gender, model position, illustrations vs real life photography, distance vs zoomed, product colour, and anything else you can think of unique to your product line.

Copy. After you create buyer personas and begin to understand your customer, test your web copy. Switch up the way you word headlines, the length of your paragraphs, bulleted lists vs numbered lists, bold vs non-bold, colloquialisms, tone of voice, brevity, and product descriptions.

Mobile-specific components. Test mobile-specific aspects of your web pages, such as navigation styles, length of copy, size of imagery, button size, location personalization, device-specific offers, iOS vs Android specific functionality, and click-to-call buttons.

When you have reliable data and have determined which variant is the top performer, implement the winner and continue to test. A/B testing is about incremental improvement.

For example, when your new product image results in a 20% boost in your CTR, make that new product image your control, create a new experiment, test, achieve significance and repeat.
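How do you know a variant has actually won and isn’t just a fluke? Most testing tools handle this for you, but one common way to check is a two-proportion z-test, sketched below in Python. The visit and conversion counts are made-up numbers for illustration, and the function name is ours; your own tool may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, visits_a, conv_b, visits_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    # Pooled rate: the conversion rate if A and B truly performed the same
    pooled = (conv_a + conv_b) / (visits_a + visits_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: control converts 400 of 20,000 visits,
# the variant converts 500 of 20,000 visits.
p_value = two_proportion_p_value(400, 20_000, 500, 20_000)
is_significant = p_value < 0.05  # 0.05 corresponds to 95% confidence
print(p_value, is_significant)
```

If the p-value is below 0.05, you can be 95% confident the difference is real, and the variant is ready to become your new control.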

In the end, A/B testing is an extremely effective tool if you conduct the tests correctly and take action based on the results. Thankfully, there are awesome tools available to you today that make the process much easier, and even make A/B testing more accessible for low traffic websites.

The Jibe Multimedia, Inc. © 2009-2025